Exploring UX metrics for AI

I really enjoyed a lecture on UX metrics for AI with Jared Spool yesterday, as part of the Leaders of Awesomeness (https://centercentre.com) lecture series. If you haven't yet joined this community, do! It's a global community of UX leaders gathering to share knowledge and learn together.

Jared has a knack for simplifying the complex, and he often draws out his thoughts, which is super helpful for a visual learner like me. Who doesn't like a good graph? He also provides great notes, which I'm quoting here.

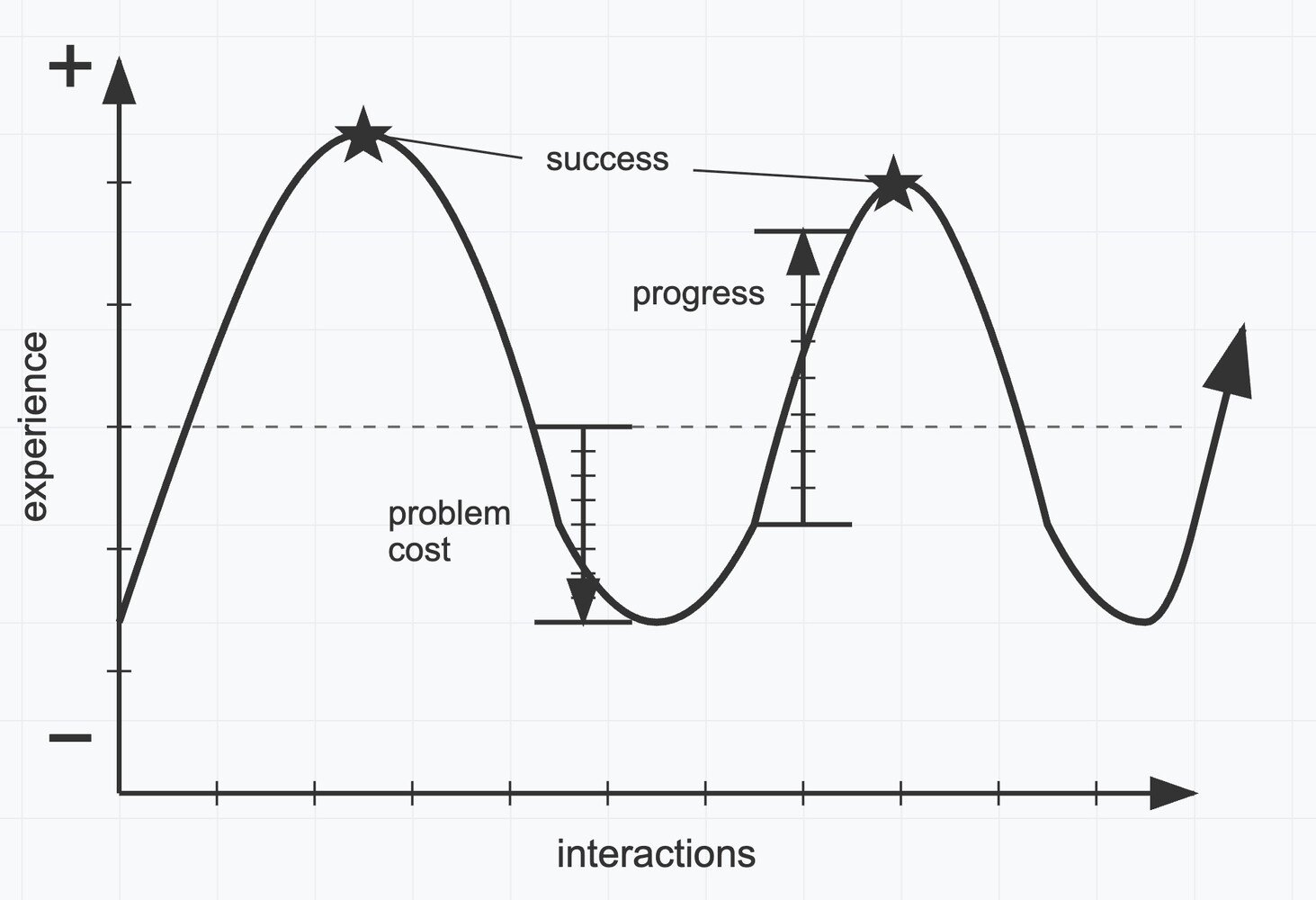

Jared set the stage by explaining that metrics tell us how our experiences are improving or not. He then drew a simple journey graph showing how interaction over time can range from frustration to delight. I redrew this graph today with Claude (https://claude.ai/new), which was hella fun!

I uploaded a sketch and then refined the result through prompt edits. Claude did a great job redrawing the graph from my sketch, though there were many more peaks and valleys in that experience for me!

Success metrics measure moments when we have achieved our interaction goal with the AI.

Progress metrics measure the improvements we make in the experience to make the interaction successful.

Problem cost metrics measure the value lost by a person or business due to poor UX. You can see how the cost metrics relate to the progress metrics: they build the backlog of pain points to be resolved.

Jared discussed how we have high expectations for AI at the moment, and outlined what he sees as the big issues standing in the way of these hopes. I can contextualize these with my Claude redrawing experience.

Not accurate enough - The first trace of my drawing was close but not 100% accurate. I had to proceed with many prompt edits to revise the drawing to my liking.

Not deterministic enough - Google says that deterministic systems are those that always produce the same output given the same input. There were certainly variations in the responses that I had to account for.

Process for reasoning isn’t transparent enough - Claude actually did a great job of explaining its reasoning, and I was able to mold my prompts around its explanations to get more accurate results. For instance, it was really helpful when Claude started sharing the x,y coordinate values with me.

Prompt language and interactions are too complicated for people to use - I leaned on my design and data viz knowledge to craft prompts that Claude could carry out. I can see how this might be harder for someone without these skills.

LLM business models are currently being subsidized - I had to move to a Pro subscription halfway through as I burned through my prompt credits. It's relatively inexpensive, but I'm thinking about how this scales over larger, more complex jobs.

Too many issues with property rights - I really hope Jared doesn't mind me redrawing his ideas!

Too much energy is required for model usage - I spent quite a bit of time prompting, and it definitely was on my mind. Hoping that all this training we are putting in will result in a lighter impact.

I learned a bunch yesterday, and I'm excited about the extended course on this subject that Center Centre (https://centercentre.com) will be offering soon. Hope to see you there! Let's learn together!